NVIDIA announced the world’s first commercially available Level 2+ automated driving system, NVIDIA DRIVE™ AutoPilot, which integrates multiple breakthrough AI technologies that will enable supervised self-driving vehicles to go into production by next year.

At CES 2019, leading automotive suppliers Continental and ZF announced Level 2+ self-driving solutions based on NVIDIA DRIVE, with production starting in 2020.

As a Level 2+ self-driving solution, NVIDIA DRIVE AutoPilot uniquely provides both world-class autonomous driving perception and a cockpit rich in AI capabilities. Vehicle manufacturers can use it to bring to market sophisticated automated driving features — as well as intelligent cockpit assistance and visualization capabilities — that far surpass today’s ADAS offerings in performance, functionality and road safety.

“A full-featured, Level 2+ system requires significantly more computational horsepower and sophisticated software than what is on the road today,” said Rob Csongor, vice president of Autonomous Machines at NVIDIA. “NVIDIA DRIVE AutoPilot provides these, making it possible for carmakers to quickly deploy advanced autonomous solutions by 2020 and to scale this solution to higher levels of autonomy faster.”

For the first time, DRIVE AutoPilot integrates high-performance NVIDIA Xavier™ system-on-a-chip (SoC) processors with the latest NVIDIA DRIVE Software to run many deep neural networks (DNNs) for perception and to process complete surround camera sensor data from outside the vehicle and inside the cabin. This combination enables full self-driving autopilot capabilities, including highway merge, lane change, lane splits and personal mapping. Inside the cabin, features include driver monitoring, AI copilot capabilities and advanced in-cabin visualization of the vehicle’s computer vision system.

DRIVE AutoPilot is part of the open, flexible NVIDIA DRIVE platform, which is being used by hundreds of companies worldwide to build autonomous vehicle solutions that increase road safety while reducing driver fatigue and stress on long drives or in stop-and-go traffic. The new Level 2+ system complements the NVIDIA DRIVE AGX Pegasus system that provides Level 5 capabilities for robotaxis.

DRIVE AutoPilot addresses the limitations of existing Level 2 ADAS systems, which, as a recent Insurance Institute for Highway Safety study showed, detect vehicles inconsistently and struggle to stay within lanes on curvy or hilly roads, resulting in frequent system disengagements in which the driver abruptly had to take control.(1)

“Lane keeping and adaptive cruise control systems on the market today are simply not living up to the expectations of consumers,” said Dominique Bonte, vice president of Automotive Research at ABI Research. “The high-performance AI solutions from NVIDIA will deliver more effective active safety and more reliable automated driving systems in the near future.”

Xavier SoC: Processing at 30 Teraops a Second

Central to NVIDIA DRIVE AutoPilot is the Xavier SoC, which delivers 30 trillion operations per second of processing capability. Architected for safety, Xavier has been designed for redundancy and diversity, with six types of processors and 9 billion transistors that enable it to process vast amounts of data in real time.

Xavier is the world’s first automotive-grade processor for autonomous driving and is in production today. Global safety experts have assessed its architecture and development process as suitable for building a safe product.

AI Inside and Out

The DRIVE AutoPilot software stack integrates DRIVE AV software for handling challenges outside the vehicle, as well as DRIVE IX software for tasks inside the car.

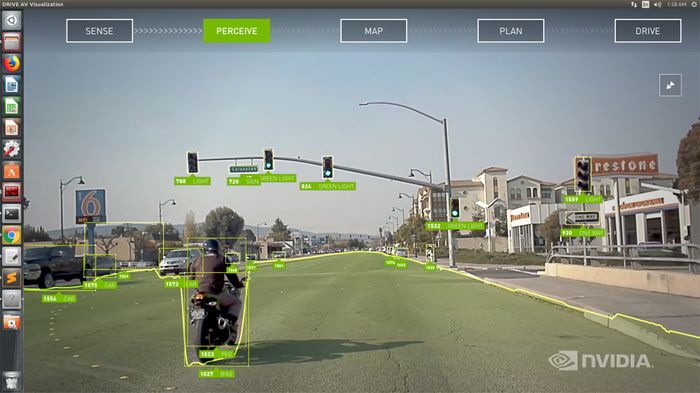

DRIVE AV uses surround sensors for full, 360-degree perception and features highly accurate localization and path-planning capabilities. These enable supervised self-driving on the highway, from on-ramp to off-ramp. Going beyond basic adaptive cruise control, lane keeping and automatic emergency braking, its surround perception capabilities handle situations where lanes split or merge, and safely perform lane changes.

DRIVE AV also includes a diverse and redundant set of advanced DNN technologies that enable the vehicle to perceive a wide range of objects and driving situations, including DriveNet, SignNet, LaneNet, OpenRoadNet and WaitNet. This sophisticated AI software understands where other vehicles are, reads lane markings, detects pedestrians and cyclists, distinguishes different types of lights and their colors, recognizes traffic signs and understands complex scenes.

In addition to providing precise localization to the world’s HD maps for vehicle positioning on the road, DRIVE AutoPilot offers a new personal mapping feature called “My Route,” which remembers where you have driven and can create a self-driving route even if no HD map is available.

Within the vehicle, DRIVE IX intelligent experience software enables occupant monitoring to detect distracted or drowsy drivers and provide alerts, or take corrective action if needed. It is also used to create intelligent user experiences, including new augmented reality capabilities. Displaying a visualization of the surrounding environment sensed by the vehicle, as well as the planned route, instills trust in the system.

For next-generation user experiences in the vehicle, the AI capabilities of DRIVE IX can also be used to accelerate natural language processing, gaze tracking or gesture recognition.

Adopted by Industry Leaders

Continental is developing a scalable and affordable automated driving architecture that will bridge from Premium Assist to future automated functionalities. It uses Continental’s portfolio of radar, lidar, camera and Automated Driving Control Unit technology powered by NVIDIA DRIVE.

“Today’s driving experience with advanced driver assistance systems will be brought to the next level, creating a seamless transition from assisted to automated driving and defining a new standard,” said Karl Haupt, head of the Advanced Driver Assistance Systems business unit at Continental. “Driving will become an active journey, keeping the driver responsible but reducing the driving task to supervision and relaxation.”

ZF ProAI offers a unique modular hardware concept and open software architecture, utilizing NVIDIA DRIVE Xavier processors and DRIVE Software.

“Our aim is to provide the widest possible range of functions in the field of autonomous driving,” explained Torsten Gollewski, head of ZF Advanced Engineering and general manager of ZF Zukunft Ventures GmbH. “The ZF ProAI product family offers an open platform for the customized integration of software algorithms – covering conventional functions as well as AI algorithms and software running on NVIDIA DRIVE.”

Other posts by Mark Leo